Question 19

- (Exam Topic 5)

You are designing an anomaly detection solution for streaming data from an Azure IoT hub. The solution must meet the following requirements:

- Send the output to Azure Synapse.
- Identify spikes and dips in time series data.
- Minimize development and configuration effort.

Which should you include in the solution?

Correct Answer: C

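Anomalies can be identified by routing data through IoT Hub to a built-in machine learning model in Azure Stream Analytics (the AnomalyDetection_SpikeAndDip function). Stream Analytics can then write its results directly to an Azure Synapse Analytics output.

Reference: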

https://docs.microsoft.com/en-us/learn/modules/data-anomaly-detection-using-azure-iot-hub/

https://docs.microsoft.com/en-us/azure/stream-analytics/azure-synapse-analytics-output
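A minimal sketch of the Stream Analytics job query behind this answer, following the pattern used in the Stream Analytics anomaly detection documentation. It relies on the built-in `AnomalyDetection_SpikeAndDip` function, so no model has to be trained or hosted. The input alias `[iothub-input]`, the output alias `[synapse-output]`, the `temperature` field, and the confidence/history/window values are all assumptions; the target Synapse table is assumed to already exist with matching columns.

```sql
-- Stream Analytics Query Language (SQL-like), not T-SQL.
-- Hypothetical names: [iothub-input] = IoT Hub input alias,
-- [synapse-output] = Azure Synapse Analytics output alias,
-- temperature = a numeric field in the device telemetry.
WITH AnomalyDetectionStep AS
(
    SELECT
        EventEnqueuedUtcTime AS event_time,
        CAST(temperature AS float) AS temp,
        -- 95 = confidence level (percent), 120 = history size (events),
        -- 'spikesanddips' = flag both upward spikes and downward dips
        AnomalyDetection_SpikeAndDip(CAST(temperature AS float), 95, 120, 'spikesanddips')
            OVER (LIMIT DURATION(second, 120)) AS SpikeAndDipScores
    FROM [iothub-input]
)
SELECT
    event_time,
    temp,
    -- The function returns a record; extract its Score and IsAnomaly fields
    CAST(GetRecordPropertyValue(SpikeAndDipScores, 'Score') AS float) AS spike_and_dip_score,
    CAST(GetRecordPropertyValue(SpikeAndDipScores, 'IsAnomaly') AS bigint) AS is_anomaly
INTO [synapse-output]
FROM AnomalyDetectionStep
```

Because detection is a single query over a built-in function, the only configuration is the input, the output, and this query, which is what keeps development effort low.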

Question 20

- (Exam Topic 5)

You have an Azure SQL database named sqldb1.

You need to minimize the possibility of Query Store transitioning to a read-only state.

What should you do?

Correct Answer: A

The Max Size (MB) limit isn't strictly enforced. Storage size is checked only when Query Store writes data to disk, and that interval is set by the Data Flush Interval (Minutes) option. If Query Store breaches the maximum size limit between storage size checks, it transitions to read-only mode. A shorter Data Flush Interval makes the size check run more often, which narrows the window in which the limit can be exceeded.

Reference:

https://docs.microsoft.com/en-us/sql/relational-databases/performance/best-practice-with-the-query-store
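A minimal T-SQL sketch showing where the settings discussed above live, assuming you are connected to sqldb1 with permission to alter it. The specific values are illustrative only and are not taken from the question's answer options. `SIZE_BASED_CLEANUP_MODE = AUTO` is included because it purges older Query Store data as the size limit is approached, which also helps keep Query Store in read-write mode.

```sql
-- Run while connected to sqldb1. Values are illustrative, not recommendations.
ALTER DATABASE CURRENT
SET QUERY_STORE (
    OPERATION_MODE = READ_WRITE,
    DATA_FLUSH_INTERVAL_SECONDS = 300,  -- "Data Flush Interval"; default is 900 s (15 min)
    MAX_STORAGE_SIZE_MB = 1024,         -- "Max Size (MB)"
    SIZE_BASED_CLEANUP_MODE = AUTO      -- clean up oldest data as the limit is approached
);

-- Check the current Query Store state and, if read-only, the reason code.
SELECT actual_state_desc,
       readonly_reason,
       current_storage_size_mb,
       max_storage_size_mb,
       flush_interval_seconds,
       size_based_cleanup_mode_desc
FROM sys.database_query_store_options;
```

If `actual_state_desc` reports READ_ONLY, comparing `current_storage_size_mb` with `max_storage_size_mb` shows whether the size limit was the cause.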

Question 21

- (Exam Topic 5)

You have an Azure SQL database named db1 on a server named server1. You use Query Performance Insight to monitor db1.

You need to modify the Query Store configuration to ensure that performance monitoring data is available as soon as possible.

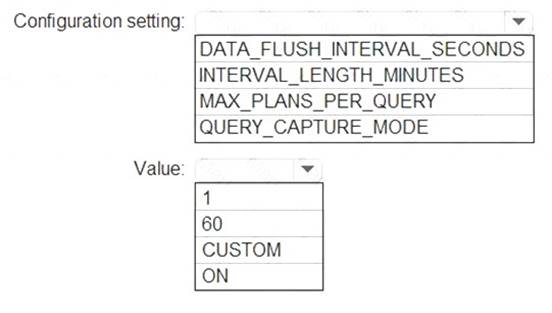

Which configuration setting should you modify and which value should you configure? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Solution:


Does this meet the goal?

Correct Answer: A

Question 22

- (Exam Topic 1)

You need to implement authentication for ResearchDB1. The solution must meet the security and compliance requirements.

What should you run as part of the implementation?

Correct Answer: E

Scenario: Authenticate database users by using Active Directory credentials.

Scenario: Create a new Azure SQL database named ResearchDB1 on a logical server named ResearchSrv01.

To authenticate a user in SQL Database or SQL Data Warehouse based on an Azure Active Directory identity, create a contained database user from the external provider:

CREATE USER [Fritz@contoso.com] FROM EXTERNAL PROVIDER;

Reference:

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-user-transact-sql
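A slightly fuller T-SQL sketch of the same approach. It assumes ResearchSrv01 already has an Azure Active Directory admin configured and that you run the statements in ResearchDB1 (not master), typically while signed in with an Azure AD identity that has ALTER ANY USER permission. The group name `[Research-Analysts]` and the choice of `db_datareader` are hypothetical examples, not part of the scenario.

```sql
-- Run in ResearchDB1 while connected with an Azure AD identity.

-- Contained database user mapped to an individual Azure AD account (from the scenario).
CREATE USER [Fritz@contoso.com] FROM EXTERNAL PROVIDER;

-- Hypothetical: map an Azure AD security group so membership is managed in the directory.
CREATE USER [Research-Analysts] FROM EXTERNAL PROVIDER;

-- Hypothetical permission choice: read-only access through a fixed database role.
ALTER ROLE db_datareader ADD MEMBER [Research-Analysts];
```

Mapping a group rather than individual accounts keeps access changes in Azure AD, so no further T-SQL is needed when people join or leave the group.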

Question 23

- (Exam Topic 5)

You have an on-premises multi-tier application named App1 that includes a web tier, an application tier, and a Microsoft SQL Server tier. All the tiers run on Hyper-V virtual machines.

Your new disaster recovery plan requires that all business-critical applications can be recovered to Azure. You need to recommend a solution to fail over the database tier of App1 to Azure. The solution must provide the ability to test failover to Azure without affecting the current environment.

What should you include in the recommendation?

Correct Answer: D

Reference:

https://docs.microsoft.com/en-us/azure/site-recovery/site-recovery-test-failover-to-azure

Question 24

- (Exam Topic 5)

A company plans to use Apache Spark analytics to analyze intrusion detection data.

You need to recommend a solution to analyze network and system activity data for malicious activities and policy violations. The solution must minimize administrative efforts.

What should you recommend?

Correct Answer: C

Azure HDInsight offers prebuilt monitoring dashboards, packaged as solutions, that can be used to monitor the workloads running on your clusters. Solutions for Apache Spark, Hadoop, Apache Kafka, Live Long and Process (LLAP), Apache HBase, and Apache Storm are available in the Azure Marketplace.

Note: With Azure HDInsight you can set up Azure Monitor alerts that trigger when the value of a metric or the results of a query meet certain conditions. You can alert when a query returns a record with a value above or below a threshold, or on the number of results a query returns. For example, you could create an alert that sends an email if a Spark job fails or if Kafka disk usage exceeds 90 percent.

Reference:

https://azure.microsoft.com/en-us/blog/monitoring-on-azure-hdinsight-part-4-workload-metrics-and-logs/