Question 7

- (Exam Topic 4)

You have an on-premises database that you plan to migrate to Azure.

You need to design the database architecture to meet the following requirements:

- Support scaling up and down.
- Support geo-redundant backups.
- Support a database of up to 75 TB.
- Be optimized for online transaction processing (OLTP).

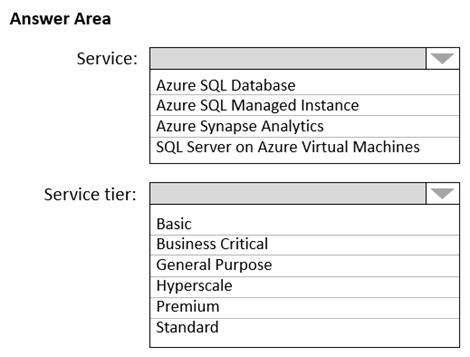

What should you include in the design? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Solution:

Box 1: Azure SQL Database

The maximum database size depends on the service tier (for example, Basic, Business Critical, or Hyperscale). With the Hyperscale service tier, Azure SQL Database supports databases of up to 100 TB.

Active geo-replication is a feature that lets you create a continuously synchronized, readable secondary database for a primary database. The readable secondary database may be in the same Azure region as the primary or, more commonly, in a different region. These readable secondary databases are also known as geo-secondaries or geo-replicas.

Azure SQL Database and SQL Managed Instance enable you to dynamically add more resources to your database with minimal downtime.
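The size requirement can be checked against the limit quoted above. The following is a minimal sketch of that arithmetic only; the function name `fits_in_tier` is hypothetical, and the 100 TB figure is taken from this explanation rather than from a live service limit.

```python
# Hyperscale size limit as quoted in the explanation above (in TB).
HYPERSCALE_MAX_TB = 100


def fits_in_tier(required_tb: float, tier_max_tb: float) -> bool:
    """Return True when the required database size is within the tier limit."""
    return 0 < required_tb <= tier_max_tb


required_tb = 75  # size requirement stated in the question
print(fits_in_tier(required_tb, HYPERSCALE_MAX_TB))
```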

Box 2: Hyperscale

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/active-geo-replication-overview

https://medium.com/awesome-azure/azure-difference-between-azure-sql-database-and-sql-server-on-vm-compar

Does this meet the goal?

Correct Answer:A

Question 8

- (Exam Topic 4)

You have data files in Azure Blob Storage.

You plan to transform the files and move them to Azure Data Lake Storage. You need to transform the data by using mapping data flow.

Which service should you use?

Correct Answer:C

You can use Copy Activity in Azure Data Factory to copy data from and to Azure Data Lake Storage Gen2, and use Data Flow to transform data in Azure Data Lake Storage Gen2.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-data-lake-storage

Question 9

- (Exam Topic 3)

You need to recommend a solution that meets the file storage requirements for App2.

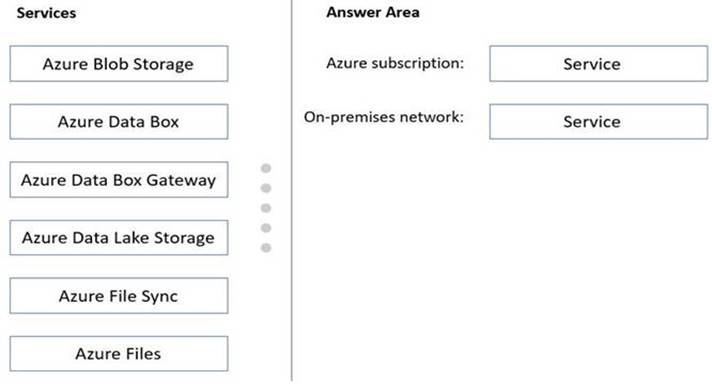

What should you deploy to the Azure subscription and the on-premises network? To answer, drag the appropriate services to the correct locations. Each service may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Solution:

Box 1: Azure Files

Scenario: App2 has the following file storage requirements:

- Save files to an Azure Storage account.
- Replicate files to an on-premises location.
- Ensure that on-premises clients can read the files over the LAN by using the SMB protocol.

Box 2: Azure File Sync

Use Azure File Sync to centralize your organization's file shares in Azure Files, while keeping the flexibility, performance, and compatibility of an on-premises file server. Azure File Sync transforms Windows Server into a quick cache of your Azure file share. You can use any protocol that's available on Windows Server to access your data locally, including SMB, NFS, and FTPS. You can have as many caches as you need across the world.

Reference:

https://docs.microsoft.com/en-us/azure/storage/file-sync/file-sync-deployment-guide

Does this meet the goal?

Correct Answer:A

Question 10

- (Exam Topic 4)

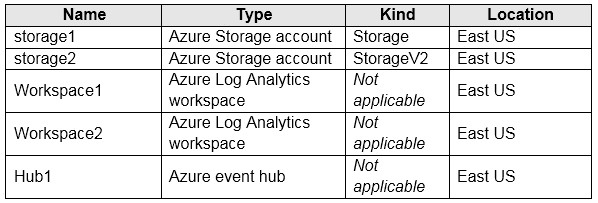

You have an Azure subscription that contains the resources shown in the following table.

You create an Azure SQL database named DB1 that is hosted in the East US region.

To DB1, you add a diagnostic setting named Settings1. Settings1 archives SQLInsights to storage1 and sends SQLInsights to Workspace1.

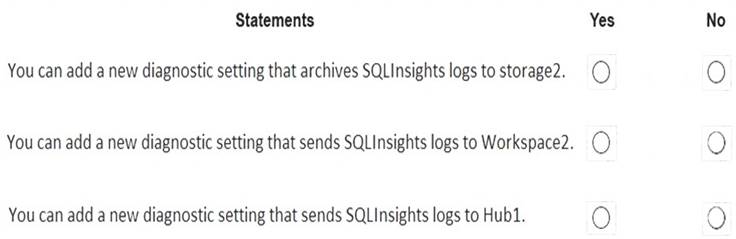

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Solution:

Box 1: Yes

Box 2: Yes

Box 3: Yes

For more information on Azure SQL diagnostics, see the following link:

https://docs.microsoft.com/en-us/azure/azure-sql/database/metrics-diagnostic-telemetry-logging-streaming-expo

Does this meet the goal?

Correct Answer:A

Question 11

- (Exam Topic 4)

You store web access logs data in Azure Blob storage. You plan to generate monthly reports from the access logs.

You need to recommend an automated process to upload the data to Azure SQL Database every month. What should you include in the recommendation?

Correct Answer:A

Azure Data Factory is the platform that solves such data scenarios. It is the cloud-based ETL and data integration service that allows you to create data-driven workflows for orchestrating data movement and transforming data at scale. Using Azure Data Factory, you can create and schedule data-driven workflows (called pipelines) that can ingest data from disparate data stores. You can build complex ETL processes that transform data visually with data flows or by using compute services such as Azure HDInsight Hadoop, Azure Databricks, and Azure SQL Database.
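As a rough illustration of the monthly automation, a Data Factory schedule trigger definition can be built as a plain dictionary and serialized to JSON. This is a sketch under assumptions: the trigger name, the pipeline name `CopyAccessLogsToSql`, the start time, and the choice of the first day of the month at 02:00 UTC are all hypothetical and do not come from the question.

```python
import json


def monthly_trigger(pipeline_name: str, start_time: str) -> dict:
    """Build a schedule trigger definition that fires once a month.

    The trigger name and the schedule (day 1, 02:00 UTC) are illustrative
    assumptions, not values taken from the question.
    """
    return {
        "name": "MonthlyLogLoadTrigger",  # hypothetical trigger name
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Month",
                    "interval": 1,
                    "startTime": start_time,
                    "timeZone": "UTC",
                    "schedule": {"monthDays": [1], "hours": [2], "minutes": [0]},
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "type": "PipelineReference",
                        "referenceName": pipeline_name,
                    }
                }
            ],
        },
    }


trigger = monthly_trigger("CopyAccessLogsToSql", "2024-01-01T02:00:00Z")
print(json.dumps(trigger, indent=2))
```

The pipeline that the trigger references would contain the copy from Blob storage to Azure SQL Database; that pipeline is not shown here.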

Reference:

https://docs.microsoft.com/en-gb/azure/data-factory/introduction

Question 12

- (Exam Topic 4)

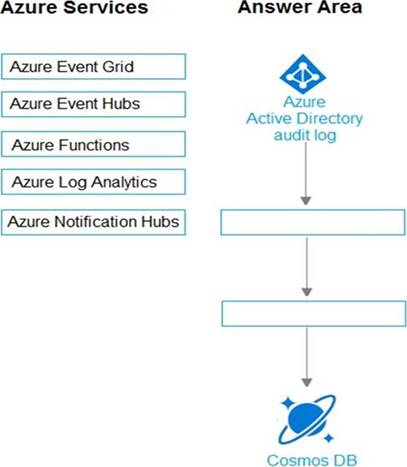

You need to design an architecture to capture the creation of users and the assignment of roles. The captured data must be stored in Azure Cosmos DB.

Which Azure services should you include in the design? To answer, drag the appropriate services to the correct targets. Each service may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Solution:

* 1. Azure AD audit log -> Event Hubs. (Azure AD logs can also be sent to a Log Analytics workspace or a storage account, but neither is offered as a choice in this question.)

https://docs.microsoft.com/en-us/azure/active-directory/reports-monitoring/tutorial-azure-monitor-stream-logs-t

* 2. An Azure function uses the Event Hubs trigger and a Cosmos DB output binding.

* a. Event Hubs trigger for the function:

https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-event-hubs-trigger?tabs=csharp
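The transformation step inside such a function might look like the sketch below. It is plain Python with no Azure SDK calls: the Event Hubs trigger and the Cosmos DB output binding are left out. The function name `to_cosmos_documents`, the assumed payload shape (a `records` array whose items carry a `properties` object), and the activity names being filtered are all assumptions for illustration.

```python
import json
from typing import Any

# Audit activities of interest; these display names are assumed for illustration.
TRACKED_ACTIVITIES = {"Add user", "Add member to role"}


def to_cosmos_documents(event_body: str) -> list[dict[str, Any]]:
    """Turn one event body into documents ready to be written to Cosmos DB.

    Assumes the body is JSON with a top-level "records" list and that each
    record has a "properties" object holding "id" and "activityDisplayName".
    """
    payload = json.loads(event_body)
    documents = []
    for record in payload.get("records", []):
        props = record.get("properties", {})
        activity = props.get("activityDisplayName")
        if activity in TRACKED_ACTIVITIES:
            documents.append(
                {
                    "id": props.get("id"),
                    "activity": activity,
                    "timestamp": record.get("time"),
                }
            )
    return documents


sample = json.dumps(
    {
        "records": [
            {"time": "2024-01-01T00:00:00Z",
             "properties": {"id": "1", "activityDisplayName": "Add user"}},
            {"time": "2024-01-01T00:05:00Z",
             "properties": {"id": "2", "activityDisplayName": "Update user"}},
        ]
    }
)
print(to_cosmos_documents(sample))
```

In a real function, the returned documents would be handed to the Cosmos DB output binding rather than printed.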

Does this meet the goal?

Correct Answer:A